This article is also available at Shibuya Learn

In April 2022, OpenAI introduced DALL-E 2, a groundbreaking creative AI tool capable of creating original, meaningful pieces of art based on simple text inputs. Thanks to DALL-E 2, anyone could generate unique and creative visuals in the blink of an eye for the first time in history. This technology paved the way for the development of new sophisticated creative tools powered by AI algorithms. It enabled the creation of photorealistic graphics and the transformation of existing images into new, AI-generated variations.

The vastness of creative AI tools 😵

Since DALL-E 2 was released, a flood of other tools has hit the market: Midjourney, Stable Diffusion, ChatGPT, Runway, Kaiber, Lexica.art, Wonder, …

Consequently, the pace at which machines will write stories and generate images, videos, text, or other forms of data will only increase from here onwards. Endless new possibilities for fan fiction, co-creation, and media, in general, are emerging.

For creatives and artists, it can feel challenging to adapt to all the new possibilities and productivity gains brought by new generative AI tools. To help you get started, we want to provide an overview of some of the most important tools in this article!

So let’s follow the white rabbit and dive into the AI Rabbit hole 🐇

Tools to master ☝️

Most of the tools we are about to mention are popular for a reason. Many of them are rather easy to learn, but hard to master. Unfortunately, many of them have stopped offering a free basic or trial version that lets you test the tool. Where free access is still available, use it to learn as much as possible, and upgrade only if necessary.

Midjourney (Image Creation) 🖼️

Midjourney has surely been among the most talked about creative AI platforms for the past year. With its current v5 version, Midjourney has made a huge leap forward and has become one of the go-to tools for creating generative art. Midjourney works through Discord: With specific commands, users can produce images by interacting with Midjourney’s Discord bot.

Unfortunately, Midjourney has recently all but abandoned its free plan: due to extreme demand, there is no free trial at the moment. Still, in our view it is the best tool on the market, and beautiful results are possible with limited knowledge or experience. The $8 basic plan is worth getting for beginners.

Here are four steps to get started with Midjourney yourself:

Step 1: Join the Midjourney Community Discord via this link, or through their site Midjourney.com

Step 2: Enter one of their channels and type in /subscribe to receive your personal link to a subscription page.

Step 3: Follow the link to activate your subscription.

Step 4: Start prompting with the /imagine command. There are three ways to do this: you can prompt directly in the Midjourney server in one of the “newbies” channels, you can chat directly with the Midjourney bot via DM, or you can invite the bot to your own server!

Of course, that’s just the short version. The easiest way to learn this tool is by using it and looking for help in the community in Discord.

Hmmm, the delicious WAGMYU steak from the Shibuya.xyz Community. Generated with Midjourney V4.


Once you get used to the prompting and generating, open up the MJ settings by using the command /settings. This allows you to easily alter the results and use different versions/models of the AI.

Stable Diffusion & Automatic1111 (Image & Video Creation) 🎨

Stable Diffusion

One of the most exciting breakthroughs in AI last year was the development of the Stable Diffusion model. This powerful, open-source text-to-image model can be run locally on your machine or on a server. In addition to the base model, it allows you to load custom models that other people (or you yourself) have trained. By fine-tuning these models yourself, you gain almost unlimited control over your generations.

In order to use Stable Diffusion-based models (or checkpoints, as they are called) efficiently, you typically want a user interface.

Automatic1111

AUTOMATIC1111 has become the standard user interface that most people use today. It is also open source and can be installed by running a few simple commands in your terminal. What’s great about AUTOMATIC1111 is that it has a lot of different extensions that you can simply plug in.

However, even though the community is passionate, it is not the easiest software to use and can be intimidating. Tutorials and installation guides are a must-watch!

The advantage of running a generative AI model locally is the freedom you gain. Once everything is set up, you can generate as many images as you want and use the tool as you like, whereas most platforms that host these models for you limit you in one way or another. See Leonardo.ai, for example.
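To give an idea of what “generate as many images as you want” can look like in practice, here is a minimal sketch that talks to a locally running AUTOMATIC1111 instance from Python. It assumes the web UI was started with its `--api` option and is listening on the default local address; the helper names `build_payload`, `decode_images` and `generate` are our own, and the parameter values are only illustrative.

```python
import base64
import json
import urllib.request

# Assumption: the web UI runs locally on its default port and was launched with --api.
API_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_payload(prompt, negative_prompt="", steps=20, width=512, height=512):
    """Collect the generation settings in the JSON structure the txt2img endpoint expects."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "width": width,
        "height": height,
    }


def decode_images(response_json):
    """The endpoint returns images as base64 strings; turn them into raw PNG bytes."""
    return [base64.b64decode(image) for image in response_json.get("images", [])]


def generate(prompt, **options):
    """Send one text-to-image request and return the generated images as bytes."""
    body = json.dumps(build_payload(prompt, **options)).encode("utf-8")
    request = urllib.request.Request(
        API_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return decode_images(json.load(response))


# Example usage (needs the web UI to be running):
# for index, png_bytes in enumerate(generate("a bowl of ramen, anime style", steps=25)):
#     with open(f"ramen_{index}.png", "wb") as image_file:
#         image_file.write(png_bytes)
```

Because everything runs on your own machine, you can wrap `generate` in a loop and produce as many variations as your hardware allows.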

ControlNet Example

(Top: Mirai ControlNet “film” version, Bottom: Original Mirai from White Rabbit Anime)

ControlNet

A feature that is especially helpful when using Stable Diffusion is “ControlNet”. Using ControlNet you can for example turn a film into an anime, and vice versa. It is a neural network structure allowing you to specify detailed conditions to the diffusion model. Simply put, ControlNet helps you explain to SD how the end result should be “built” using a template image as a reference. This allows you to guide what posture a person should have, or how the image should be composed.

The ControlNet technique can also be used to create videos (often in combination with other tools like Ebsynth). Here’s an example starring Anime Bruce Lee:

My last name is Lee. Bruce Lee. pic.twitter.com/hc678dzRgO

— Bruce Lee (@brucelee) April 11, 2023

Sidenote: It is important to know that most AI tools that you can host on your own PC require rather powerful hardware, i.e. modern gaming graphics cards and CPUs. So check the respective system requirements before you start.

Dall-E 🖌️

As mentioned in the intro, DALL-E was the first publicly noticeable breakthrough in generative image AI. Even though DALL-E’s image generation results are not as detailed as, for example, Midjourney’s, what DALL-E does offer, and often does very well, is easy inpainting and outpainting for existing images.

Inpainting

Inpainting is a feature that allows you to select a certain area in your image and replace the content of this area via text prompts.
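The idea of “select an area and replace only that area” can be pictured with a tiny toy example. This is not how DALL-E works internally; it is only a sketch of the underlying principle, assuming the selection is stored as a mask in which 1 means “may be regenerated” and 0 means “keep the original”. The function name `composite` and the tiny 3×3 “image” of labels are made up for illustration.

```python
def composite(original, generated, mask):
    """Take generated cells where the mask is 1 and keep original cells where it is 0."""
    return [
        [new if selected else old for old, new, selected in zip(old_row, new_row, mask_row)]
        for old_row, new_row, mask_row in zip(original, generated, mask)
    ]


# A toy "image" made of labels instead of pixels.
original = [
    ["sky", "sky", "sky"],
    ["sky", "beak", "sky"],
    ["grass", "grass", "grass"],
]
# What the AI proposes for the whole canvas (only the masked part is used).
generated = [
    ["?", "?", "?"],
    ["?", "cigarette", "?"],
    ["?", "?", "?"],
]
# The selection: only the centre cell may change.
mask = [
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0],
]

result = composite(original, generated, mask)
for row in result:
    print(row)
```

Everything outside the selection stays untouched, which is why inpainting is so handy for fixing small details.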

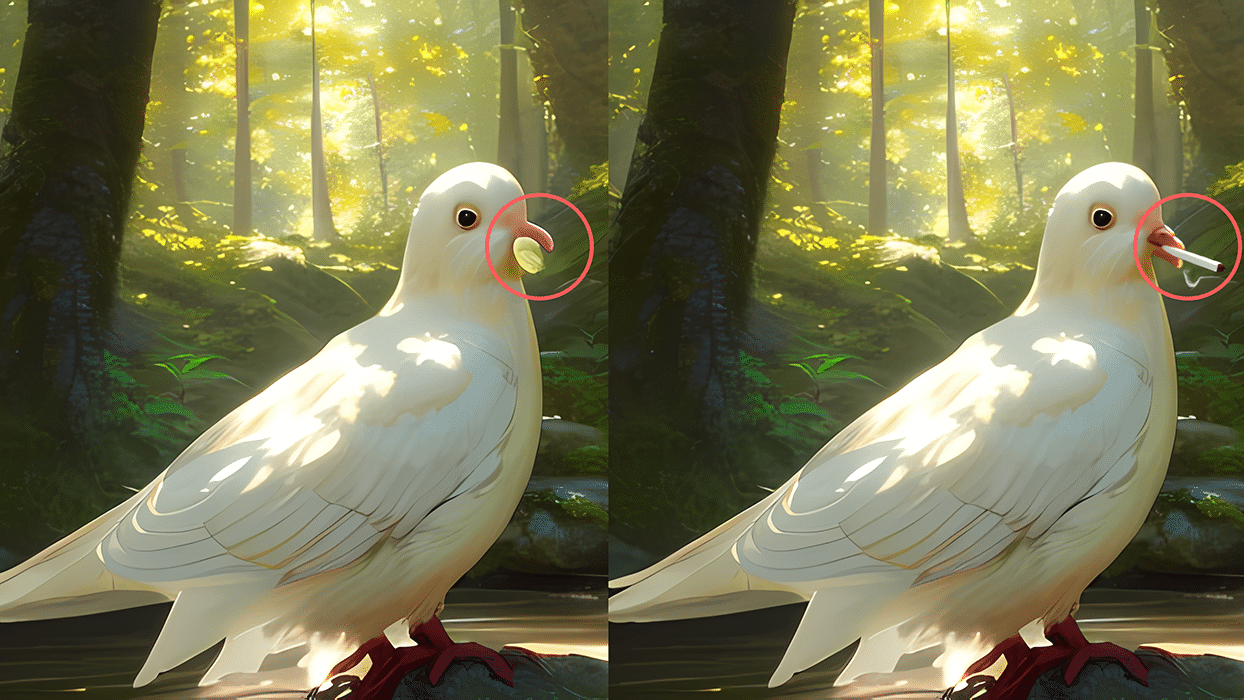

Adding a cigarette to the beak with Dall-E Inpainting.


Keep in mind that it might take a few tries until the result really fits. We have changed only a small detail here, but you could also try to replace a person in an image, for example.

Outpainting

Outpainting is, as the name suggests, not changing the content of your original image but extending it by generating content around it.
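One way to picture this, as a rough sketch rather than a description of DALL-E’s internals: the original image is placed on a larger canvas, and only the newly added border is marked as “to be generated”. The function name `extend_canvas` and the label-based toy image below are our own illustration.

```python
def extend_canvas(image, pad_left, pad_right, fill=None):
    """Widen every row of the image and return the new canvas plus a mask of the new cells."""
    canvas, mask = [], []
    for row in image:
        canvas.append([fill] * pad_left + list(row) + [fill] * pad_right)
        # 1 marks empty cells the AI should fill, 0 marks original content to keep.
        mask.append([1] * pad_left + [0] * len(row) + [1] * pad_right)
    return canvas, mask


image = [
    ["dove", "dove"],
    ["dove", "dove"],
]
canvas, mask = extend_canvas(image, pad_left=1, pad_right=2)
for canvas_row, mask_row in zip(canvas, mask):
    print(canvas_row, mask_row)
```

The original content sits unchanged in the middle, and the text prompt only has to describe what should appear in the empty areas to the left and right.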

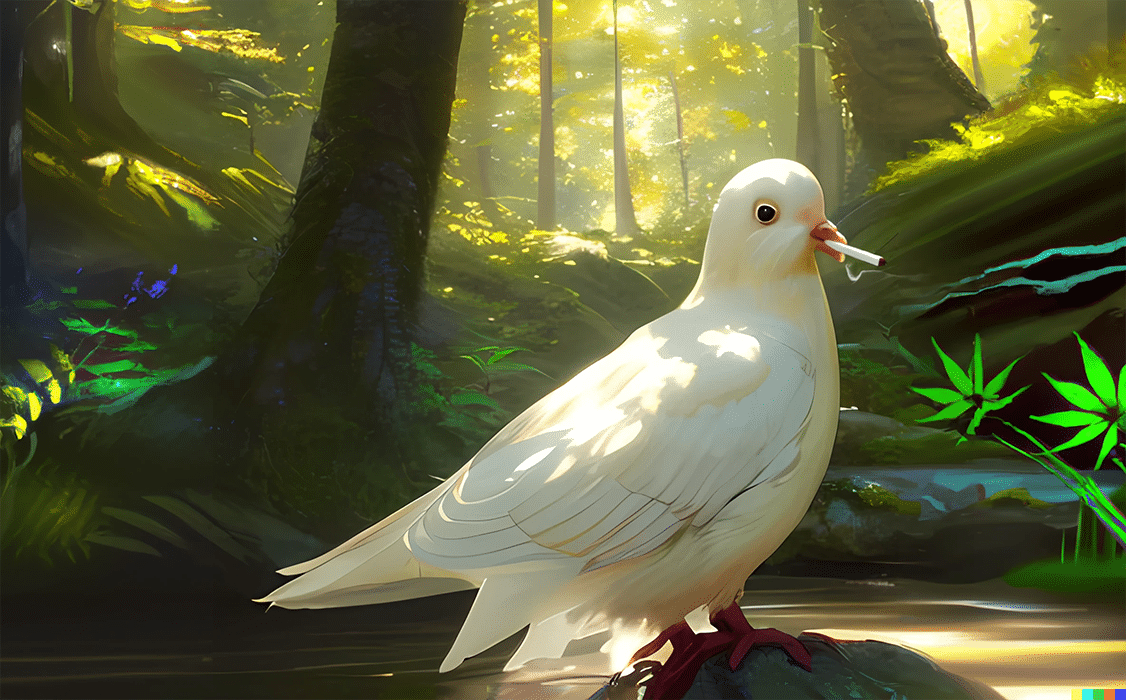

Outpainted (left & right) version of our dove with the cigarette in its beak.


Again, you may have to adjust your prompt a couple of times to get a better result. You can also combine Outpainting and Inpainting to achieve even better results:

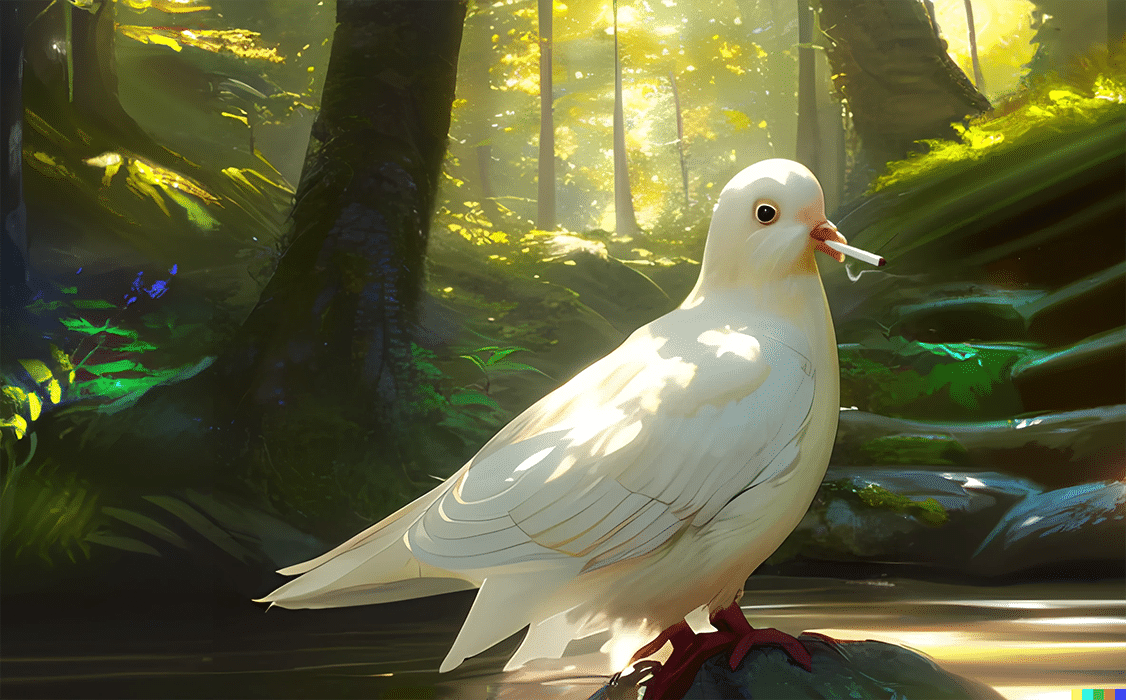

Outpainted version after editing the right side with the inpainting tool.


ChatGPT (Text) 📝

GPTs (Generative Pre-trained Transformers) are large language models that use deep learning to produce human-like text. Many of these models are tailored to respond to messages, come up with texts, or even take over simple coding tasks, like a chatbot assistant on steroids.

You can incorporate these AIs into every imaginable scenario in your personal and professional life. Need inspiration and a shopping list for a new recipe? Ask ChatGPT. Need a summary of an article? Give it to ChatGPT and ask politely.
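If you would rather script such a request than type it into the chat window, the same models can also be reached through OpenAI’s API. The following is a minimal sketch, assuming you have an API key (which is separate from a ChatGPT account) stored in the `OPENAI_API_KEY` environment variable; the helper names, the system instruction and the choice of `gpt-3.5-turbo` are our own assumptions.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_summary_request(article_text, model="gpt-3.5-turbo"):
    """Build the chat payload: a system instruction plus the user's polite request."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You summarize articles in three sentences."},
            {"role": "user", "content": "Please summarize this article:\n\n" + article_text},
        ],
    }


def summarize(article_text):
    """Send the request and return the text of the model's reply."""
    api_key = os.environ["OPENAI_API_KEY"]  # assumption: the key is set in the environment
    body = json.dumps(build_summary_request(article_text)).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


# Example usage (needs a valid API key and an internet connection):
# print(summarize(open("article.txt", encoding="utf-8").read()))
```

Note that API usage is billed separately from the ChatGPT web app.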

This goes as far as letting GPT models write entire business models for you.

With the recent development of tools like AutoGPT that connect ChatGPT to the internet, we have reached a point where such a model is able not only to respond to your prompts but also to set itself a whole series of tasks and track them in order to achieve the goal you gave it. All of this happens automatically without your help, hence “AutoGPT”.

At the time of writing, you can still create a free ChatGPT account that gives you access to GPT-3.5 whenever demand is low. If you want to use GPT-4 and get faster responses, you have to upgrade to the paid plan (ChatGPT Plus).

Runway ML 📹

Another major AI platform is Runway ML, and more specifically their tool called GEN-1. They provide powerful AI video generation and editing tools like background removal, inpainting, or motion tracking. Furthermore, their Text-to-Video AI GEN-1 lets you create video snippets from text inputs.

While GEN-1 is already an impressive first step, we’ve found that many of those tools are still early and the results somewhat strange (or artsy). But we expect even more mind-blowing results in the future.

However, even if the results are not super convincing yet, now is the time to get involved with the technology and gain first-hand experience, especially as long as some of these companies still offer free trial access.

ElevenLabs 🎤

Another exciting advancement in AI has been the emergence of voice generation platforms like ElevenLabs. This tool can clone a voice from just a few minutes of recordings, which makes it perfect for producing troll posts and videos about former presidents.

The tool is pretty straightforward, making it perfect for AI beginners. All you have to do is record or extract a few minutes of clean audio with the voice you want to clone. Upload the audio track to the platform and let the AI do all the magic.

Be aware that results will only be good if the quality of the original sound is also good and if there is no additional background noise in your recording. Also, the more material you give the AI to train on, the higher the chance of a good result.

To synchronize the voices we have generated with video snippets, we currently use an older open-source project called “Wav2Lip”. You can find it here and try it out for yourself. Note, however, that it is a bit more complicated to use.

Bonus: Adobe Creative Cloud

Combining Midjourney images with small effects

The Midjourney prompt for this Ramen:

A delicious bowl of ramen on a table, a digital painting in the style of akira manga, stars in the background, high quality, a restaurant in space, wagyu beef --ar 16:9 --v 5 --no human woman man
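This prompt follows a simple pattern: the description comes first, and the parameters (each introduced by a double hyphen) come last. Here `--ar` sets the aspect ratio, `--v` picks the model version, and `--no` lists things to keep out of the image. If you reuse the same settings a lot, a small helper can assemble the prompt for you. The sketch below is just an illustration; the function name `build_midjourney_prompt` is our own, and it writes the `--no` terms separated by spaces exactly as in our prompt above.

```python
def build_midjourney_prompt(description, aspect_ratio=None, version=None, exclude=()):
    """Assemble the text typed after /imagine: description first, parameters last."""
    parts = [description.strip()]
    if aspect_ratio:
        parts.append(f"--ar {aspect_ratio}")
    if version is not None:
        parts.append(f"--v {version}")
    if exclude:
        # Mirrors the prompt above, which separates the excluded terms with spaces.
        parts.append("--no " + " ".join(exclude))
    return " ".join(parts)


prompt = build_midjourney_prompt(
    "A delicious bowl of ramen on a table, stars in the background, wagyu beef",
    aspect_ratio="16:9",
    version=5,
    exclude=["human", "woman", "man"],
)
print(prompt)
```

The printed text can be pasted straight after the /imagine command in Discord.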

Even though the above-mentioned tools already deliver really good results out of the box, the results may not be exactly what you wished for. A tiny detail might be off, or you may want to add some text or other effects to your images. Therefore, it is still important to be able to use the “good old tools”, like the ones that come with Adobe Creative Cloud.

Adobe has already been heavily implementing more and better AI features into their toolbox as well. In fact, Photoshop has had amazing new AI features for years. Additionally, they have also just recently launched their new AI tool called Adobe Firefly. Image and video editing has never been this easy, but still, practical knowledge of these “manual” tools is as important as ever if you want to customize your AI creations.

Resources to bookmark

Text-to-Image tools:

Dall-E, Midjourney, Stability

Text-to-Text AI:

ChatGPT, Copy.ai, Jasper

Databases with tons of AI tools:

Futuretools.io, Creaitives.com, Cogniwerk.ai

Other useful resources:

Midjourney Reference Sheet, ChatGPT Prompt Repository, Hugging Face Community

Conclusion 🤔

In conclusion, the advancement of artificial intelligence enables smaller teams to achieve remarkable results in less time than ever before.

However, this does not mean that the process is easy, as there is limited online learning material and no precedent to follow. Human creativity is still needed to work around the AIs, at least for now. And staying up-to-date with the latest tools and developments to unleash the full potential of this technology is more important than ever.