“With great power comes great responsibility”

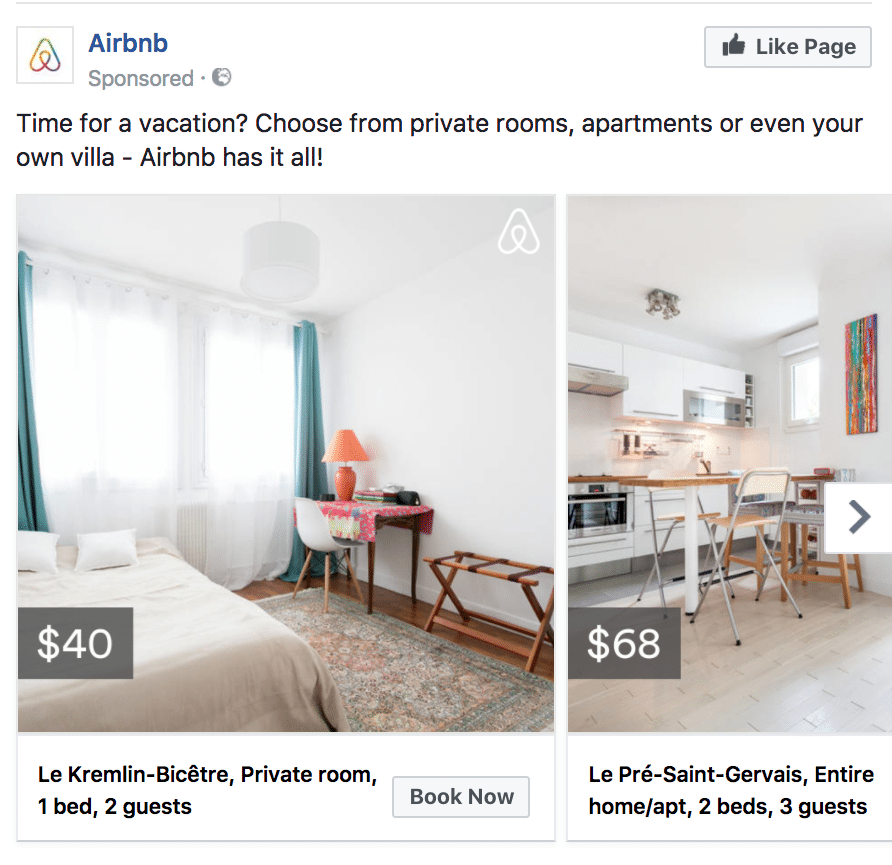

Did you ever wonder how it’s possible that, minutes after you were browsing bungalows in Bali on Airbnb, ads appear all over your browser and social media suggesting more beautiful rooms in the exact same place on the island? If so, you’re not alone. Marketing and technology experts worldwide are constantly coming up with new, creative and intelligent ways to leverage every single piece of data available, and it’s becoming increasingly hard for users to keep up.

Online marketing has become too important for businesses to ignore.

Pretty much every major consumer brand has entered the digital market, using social media to build relationships with its audience and expand its customer base. Some of these advertisers stick to basic social media communication, posting pictures and videos; others have learned to exploit targeted ads to their full potential and actively manipulate public opinion in their favour, sometimes through loopholes, sometimes through ethically questionable methods. Many of those companies operate in grey areas because technology is changing so fast that lawmakers struggle to keep up. As a consequence, the digital landscape, specifically in areas like cybersecurity and data protection, resembles the gold rush of the 1840s. Data is one of the many forms of this new gold, and new players enter the market daily to amass the unclaimed digital nuggets we leave behind when browsing the web and using their services. This rush for data, and the possibilities that emerge from an unprecedented amount of it, is reaching a point at which it threatens our concepts of freedom and democracy.

In the western world, we had two major wake-up calls.

We have all been direct or indirect witnesses of two recent examples of the real-life implications of targeted manipulation using big data: Brexit and Donald Trump’s election. Both outcomes were thought impossible by many, and the aftermath of both events made people wonder how they had actually come about. How could this happen?

After the Cambridge Analytica scandal in 2018 and data breaches at Facebook, the CEOs of several major tech companies were called to testify at hearings before lawmakers. Each time, the main subjects were privacy issues and questions about manipulation and abuse of power (the most prominent cases being Mark Zuckerberg of Facebook and, more recently, Sundar Pichai, CEO of Google). It should be obvious that the Cambridge Analytica media scandal was only the tip of the iceberg and that there’s a lot more going on that never even reaches the public discussion.

During Sundar Pichai’s hearing, Congressman Bob Goodlatte said the following in his introduction about accepting terms of use: “I think it is fair to say that most Americans have no idea on the volume of data and information that is collected (by Google)”. The same can, of course, be said for Facebook and every other major tech platform on the planet that requires its users to agree to some seemingly endless Terms of Service. In Europe, measures like GDPR aim to improve on this by forcing companies to be brief and clear about which data they collect and how they use it. While European companies are actively required to adapt, companies on other continents, notably in the United States, are building and promoting entire industries around these questionable models. As a user, you comply, or you miss out.

A new business model

Many of the huge tech platforms (Amazon, Google, Instagram, Snapchat…) rely heavily on the amount of data they collect and the monopoly they have built around it. These platforms flourish by knowing what you like, oftentimes before you know it yourself, and they serve it to you on a silver platter. Services like Google, Google Maps, Facebook and Instagram are often perceived as “free” when in reality they are not.

When Mark Zuckerberg was asked in one of the hearings whether Facebook would “sell user data”, he said:

“We do not sell data to advertisers […] what we allow is for advertisers to tell us who they want to reach. And then we do the placement.” At the end of the day, it’s pretty much the same thing.

“If the service is free, YOU are the product”.

The difference that many people have yet to internalize is that they no longer pay with money but with their data, which is aggregated and monetized through targeted advertising.

A recent study by Varn estimated that two-thirds of people cannot differentiate between organic Google search results and paid results (although the latter are literally tagged with an “ad” icon). This inability to navigate the digital sphere without being deceived makes our society vulnerable to abuse. Scenarios like Russia manipulating the 2016 elections using Facebook, Google and Twitter ads, or the president of the United States using improperly acquired data to win the election, seem to be straight out of the newest sci-fi thriller, yet this is happening right now, right under our noses.

Donald Trump and online quizzes

The Cambridge Analytica case was probably the most prominent data scandal of 2018. What happened? Donald Trump’s campaign team hired Cambridge Analytica, a “political research” company, to support the electoral campaign. To do so, the company used quizzes on Facebook to gain (unauthorized) access to sensitive user data. Furthermore, by exploiting vulnerabilities in the system, the company managed to collect data on the Facebook friends of quiz takers, people who had not even taken the quiz themselves, which is the real scandal. To be clear, similar scenarios happen all the time; Cambridge Analytica was not the only company to exploit these kinds of vulnerabilities, and probably not even the biggest fish. Regulation is in its early stages and, although many of those practices are highly unethical, they are not (yet) illegal.

Through said quiz, the company managed to get access to the data of over 50 million Facebook profiles without notifying users that their data was being recorded. This data was then used to create psychographic user profiles. In this case, Cambridge Analytica combined the data with behavioural psychology based on the so-called OCEAN model. This model allowed the company to predict which approach would be most effective in influencing the opinions of different personality types. In other words, people saw advertisements tailored to their personality, designed to trigger them or make them adopt a certain opinion.

Alexander Nix, former CEO of Cambridge Analytica, explained this with an example referring to the right to carry a firearm:

“For a highly neurotic and conscientious audience, you’re going to need a message that is rational and fear-based or emotionally based. In this case, the threat of a burglary and the insurance policy of a gun is very persuasive. Conversely to a closed and agreeable audience, these are people who care about tradition, habits, family and community, this could be the grandfather that taught his son to shoot and the father who will in return teach his son.”

The actual effectiveness of these ads has been the subject of heated debate, but one way or another, this case has demonstrated very clearly the potential for manipulating the masses with data obtained without authorization.

Considering the incredible pace at which services are evolving, it is virtually impossible for companies to find data breaches before third parties start exploiting them, and in many cases Facebook, Google and co. don’t seem to care much about it. As a matter of fact, Sandy Parakilas, a former platform operations manager at Facebook who was in charge of monitoring and policing data breaches by third-party services, warned the company about these kinds of risks and criticised Facebook for not taking his warnings seriously enough.

The incredible number of data-breadcrumbs we are leaving when browsing every day combined with many users’ blind trust in tech giants not only poses a threat to our privacy but even to democracy as such. From this emerges an entirely new discussion about digital ethics and the implications of cybersecurity and data protection for the real world.

For businesses, strategies based on audience psychology like the ones Cambridge Analytica used can be highly profitable if applied in the right way. It’s one of the main elements of modern online marketing strategies, so every entrepreneur needs to ask her/himself:

How can you benefit with a clear conscience?

And how do efficient strategies actually work and convert?

While companies like Facebook and Google do not give regular clients access to user information, they let you create targeted audiences, track user behaviour on your website, or re-engage with users who previously visited your website via so-called remarketing strategies. On Facebook, advertisers cannot target audiences smaller than 1,000 people. Interestingly enough, a few years ago it was possible to target even smaller groups, but for “data protection” reasons Facebook was forced to adapt its system. When creating classical ads, marketers can choose between demographic factors and user interests. User interests are derived from aggregated data on user behaviour, which simply means that if you regularly like Facebook pages or make Google searches related to Bayern Munich, for example, the platforms will attribute this interest, as well as broader interests like football, to your profile.
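The interest attribution described above can be pictured with a small sketch. It is purely illustrative: the taxonomy, the `infer_interests` function and the `min_signals` threshold are assumptions made for this example and do not reflect how Facebook or Google actually build their profiles.

```python
from collections import Counter

# Hypothetical taxonomy mapping a specific topic to a broader interest.
PARENT_INTEREST = {
    "Bayern Munich": "Football",
    "Borussia Dortmund": "Football",
}


def infer_interests(events, min_signals=2):
    """Return the set of interests attributed to one profile.

    events: list of topic strings, one per page like or search.
    A topic becomes an interest once it appears at least `min_signals`
    times; its broader parent interest (if any) is added as well.
    """
    counts = Counter(events)
    interests = set()
    for topic, seen in counts.items():
        if seen >= min_signals:
            interests.add(topic)
            parent = PARENT_INTEREST.get(topic)
            if parent is not None:
                interests.add(parent)
    return interests


# Two Bayern-related signals, one unrelated search.
activity = ["Bayern Munich", "Bali", "Bayern Munich"]
print(sorted(infer_interests(activity)))  # ['Bayern Munich', 'Football']
```

A single search for Bali is not enough to count as an interest here, while the repeated Bayern Munich signals add both the club and the broader football interest.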

If you were running a shop that sells football shirts, for example, it wouldn’t make sense to show your Bayern shirt to Borussia Dortmund fans. In addition to this more conventional marketing, you can have a remarketing strategy in place to re-engage with users after they have visited your website.
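As a rough sketch of what interest-based targeting amounts to, the snippet below selects an audience by one interest and excludes another. The `build_audience` function and the profile format are hypothetical; the only figure taken from the article is the minimum audience size of 1,000, and the way it is enforced here is an assumption.

```python
MIN_AUDIENCE_SIZE = 1000  # figure mentioned in the article; enforcement is illustrative


def build_audience(profiles, include, exclude=(), min_size=MIN_AUDIENCE_SIZE):
    """Return the user ids that match `include` and none of `exclude`.

    profiles: dict mapping user id -> set of interests (hypothetical format).
    Raises ValueError if the resulting audience is below `min_size`.
    """
    excluded = set(exclude)
    audience = [
        user_id
        for user_id, interests in profiles.items()
        if include in interests and not (interests & excluded)
    ]
    if len(audience) < min_size:
        raise ValueError(
            f"audience of {len(audience)} is below the minimum of {min_size}"
        )
    return audience


profiles = {
    "anna": {"Bayern Munich", "Football"},
    "ben": {"Borussia Dortmund", "Football"},
    "cleo": {"Bayern Munich"},
}

# min_size is lowered only so that this toy example passes the check.
print(build_audience(profiles, "Bayern Munich", ["Borussia Dortmund"], min_size=2))
# ['anna', 'cleo']
```

The advertiser only ever describes who should be reached; the list of matching users stays on the platform side, which is the distinction the article draws between targeting and selling data.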

To give you a real-life example of remarketing, let’s have another look at the Airbnb example from the beginning:

When you register with Airbnb, you sign up either “using Facebook” directly or with your e-mail address, which oftentimes is the same one you use on Facebook. The connection is thus easily established. If you now browse Airbnb’s website or mobile app while logged in, they can later show you customized ads on Facebook, related to what you were looking up, using a tool called the “Facebook Pixel”. This is why you see Airbnb ads for that beautiful bungalow in Bali after you looked it up on their website.
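The remarketing logic in this example can be sketched as follows. This is not the Facebook Pixel API: the `PixelEvent` record, the event names and the `remarketing_targets` function are hypothetical and only illustrate the idea of matching tracked website behaviour to the ad a user sees later.

```python
from dataclasses import dataclass


@dataclass
class PixelEvent:
    """One tracked action on the advertiser's website (hypothetical format)."""
    user_id: str
    event: str    # "view" or "booking"
    listing: str


def remarketing_targets(events):
    """Map each user to the last listing they viewed but did not book."""
    last_viewed = {}
    booked = set()
    for e in events:
        if e.event == "view":
            last_viewed[e.user_id] = e.listing
        elif e.event == "booking":
            booked.add((e.user_id, e.listing))
    return {
        user: listing
        for user, listing in last_viewed.items()
        if (user, listing) not in booked
    }


events = [
    PixelEvent("u1", "view", "Bali bungalow"),
    PixelEvent("u2", "view", "Paris loft"),
    PixelEvent("u2", "booking", "Paris loft"),
]
print(remarketing_targets(events))  # {'u1': 'Bali bungalow'}
```

In this sketch the user who already booked is left alone, while the one who only browsed is the person who would later see the bungalow ad.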

Advertising via big platforms like Google and Facebook is generally GDPR-compliant, and as long as you do not use questionable third-party data sets you don’t need to worry.

As an online marketing agency, the best advice we can give you is to make your ads relevant to your audience, because that’s what targeting is about. As long as your ads are relevant to your audience, they won’t feel bad about you targeting them. A Dortmund fan will not convert to a Bayern fan just because they see your ad six times per week.

How to handle data security?

Both end consumers and companies have to make choices and take responsibility for these choices and their possible consequences.

For end consumers, this means choosing between having their behaviour tracked for the sake of a better user experience and going back to random, spammy ads like in the good old days on TV.

Marketers, on the other hand, face the decision either to use the new possibilities responsibly to grow their business or to move in a grey zone and risk severe consequences, just like Cambridge Analytica, which is now out of business.

In the end, it comes down to common sense: everything is permitted as long as there is transparency and consent. We are going to see these patterns repeat themselves until we come to a collective understanding of the implications and responsibilities that emerge with the use of these new technologies for governments, companies and the individual. Since the dawn of online marketing there have been white-hat marketers, who have a sense of ethics and responsibility, and black-hat marketers, who use obscure techniques like Cambridge Analytica did. It is the latter who pose a threat to fair practices and our society if we allow them to process data in ways that are invisible to the public.

“With great power comes great responsibility” – Uncle Ben

Tips to protect yourself

You can actually see which apps have access to your data on Facebook and Google.

For Facebook, access your settings and hit up the “apps and websites” tab to see and manage access of apps and websites to your profile.

To manage Google ads preferences go to https://adssettings.google.com/authenticated. Here you can disable personalized ads, but be aware that third parties can still track and serve you ads.

To find out which third parties are collecting data to customize ads and manage permissions, go to http://www.youronlinechoices.com/lu-fr/controler-ses-cookies.

Revoking the tracking permission does not mean that you won’t see ads anymore. It only keeps those companies from using your data for targeted ads.

To see which advertisements a Facebook page is running, simply add “/ads” after its Facebook URL, and you’ll have a complete list of all its active ads. On the Facebook smartphone app you can access the same page by tapping the small, round “i” icon next to a page’s cover photo.


This article was written as a guest article for Silicon Luxembourg and is also available on medium.com.